April 13, 2025

Dell Tackles AI Infrastructure With Disaggregated Servers And Storage

AI Infrastructure

It is funny how companies can find money – lots of money – when they think IT infrastructure spending can save them money, make them money, or do both at the same time. This is the hope with AI, and everyone is trying to benefit from it in those ways.

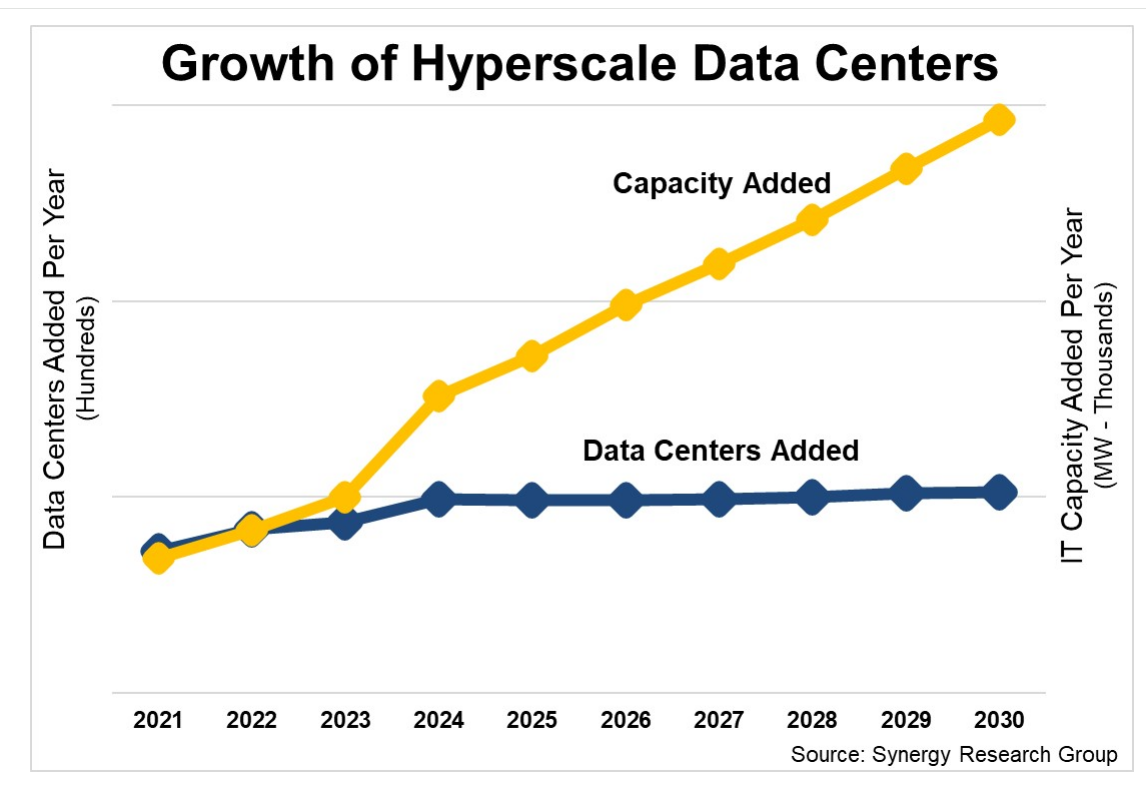

GenAI, which is where a lot of the excitement is coming from, is evolving from prompt-and-respond chatty interfaces to more sophisticated AI agents and reasoning models that are more thoughtful and can be entrusted to take actions. These models take more compute than blurty LLMs. In the run-up to Nvidia’s GPU Technology Conference 2025 last month, IDC analysts released a report showing that AI infrastructure spending worldwide in the first half of 2024 grew to $47.4 billion, a 97 percent increase, and that by 2028 it will be well beyond $200 billion. Subsequent to that, IDC said that broader AI workloads are pushing server and storage spending to incredible highs.
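As a rough sanity check on the IDC numbers, the quoted growth rate can be turned back into the spending level it implies for the earlier period. This is only a sketch: it assumes the 97 percent increase is measured against the same period a year earlier, which the text does not state, and the function name is made up for illustration.

```python
# Back-of-the-envelope check on the IDC figures quoted in the article.
# Assumption: the 97 percent increase is measured against the same
# period one year earlier; the article does not spell this out.


def implied_prior_value(current: float, growth_pct: float) -> float:
    """Return the earlier value that grows to `current` after `growth_pct` percent."""
    return current / (1.0 + growth_pct / 100.0)


h1_2024_spend_bn = 47.4  # from the article, in billions of US dollars
growth_pct = 97.0        # from the article

prior_spend_bn = implied_prior_value(h1_2024_spend_bn, growth_pct)
print(f"Implied prior-period spending: ${prior_spend_bn:.1f} billion")
```

Under that assumption, the earlier figure works out to roughly $24 billion, which is consistent with spending nearly doubling.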

Gartner piped up with a forecast of what server, storage, services, and software spending on GenAI looks like over the next few years.

A report by Arm last month outlined enterprises’ enthusiasm for AI – 82 percent of the 655 business leaders surveyed said their organizations are using AI applications – and lack of the required infrastructure: 80 percent have budgets put aside for AI, but only 29 percent said they have the infrastructure that can automatically scale to meet AI demands.

What the infrastructure will look like is still being worked out. As AI training and inference jobs expand, the infrastructure will have to include distributed computing, energy efficiency (there’s a lot of talk about liquid cooling), datacenters with modular designs, a mix of powerful GPUs and CPUs, and large memory capacities, according to Arm.

The OEMs Chase GenAI, Hope For Profits

System makers are pushing hard in that direction. That includes Cisco – and its Unified Computing System (UCS) converged server-switching systems – and Hewlett Packard Enterprise. Cisco at its Partner Summit last fall unveiled AI hardware offerings that leaned on its UCS and validated designs, and in February announced a deal with AI giant – and datacenter competitor – Nvidia to give organizations a broader range of AI networking options that include technology from both vendors.

Also in February, HPE rolled out the latest additions to its Gen12 ProLiant servers that include Intel’s “Granite Rapids” Xeon 6 CPUs, following up the launch of the first systems in the family aimed at AI workloads and jammed with Nvidia’s newest GPUs.

And speaking of Nvidia, at GTC the company introduced what executives said was an exaflop-capable rack system of servers based on the company’s GB200 NVL72 system and targeting AI and similarly compute- and power-hungry workloads.

Dell Is Up Next

Dell has been working on enhancements to its PowerEdge servers and various storage and data protection offerings to make them better for AI. Those enhancements are being announced now, and the company promises more next month at its Dell Technologies World 2025 conference.

The new products address what Varun Chhabra, senior vice president of product marketing for Dell’s ISG unit, said are the top challenges enterprises are trying to manage. They’re looking for infrastructure to help them balance the need to run traditional workloads like virtual machines, databases, and systems of record – think ERP and CRM – but also AI, edge applications, and containerized jobs.

“Looking across this estate requires organizations to think very differently about their infrastructure strategy,” Chhabra explains. “What we hear from customers most often is that they are trying to architect their technology to avoid vendor lock-in. It is no longer just about supporting virtual machine-based workloads. It’s about virtual machines, containers, and bare metal. They’re also looking at making sure that they get the most out of their technology investments so that there’s efficient resource utilization, not just from a cost perspective, but also from a power and real estate space perspective.”

Distributed Datacenters

As AI takes hold in the enterprise, businesses are seeing their datacenters become increasingly distributed, and they need to ensure their AI workloads have access to all of their data in a consistent format regardless of where it’s located. There needs to be a shared data layer across the entire infrastructure, which needs to be able to dynamically scale to meet the myriad changing demands of the workloads, Chhabra said.

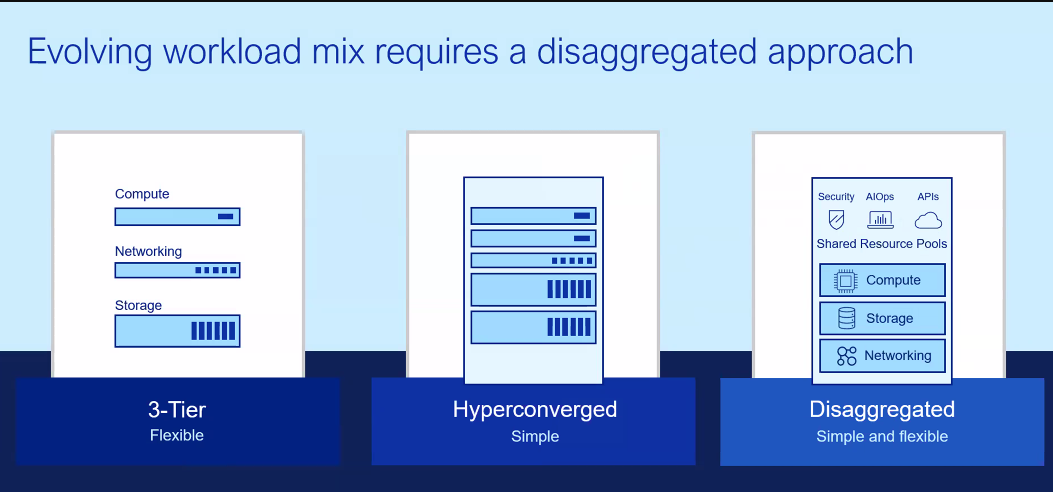

It’s what Dell calls a disaggregated architecture, where compute, storage, and networking are pulled together to create shared and adaptable resource pools that can be applied to any workload organizations need to run and that deliver better scalability and availability. Chhabra said disaggregation is an evolution of a three-tiered strategy – independent scaling of compute, storage, and network, which is complex and often multi-vendor – and hyperconverged (HCI), which is simpler but locks organizations into a single vendor or operating system, limiting needed flexibility for scaling or dynamic workloads.
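The scaling difference between hyperconverged and disaggregated designs can be illustrated with a toy capacity calculation. This is a minimal sketch rather than Dell's sizing method: the node sizes, the demand figures, and all function names are hypothetical, and real sizing would also account for networking, redundancy, and licensing.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Demand:
    cores: int       # CPU cores the workloads need
    storage_tb: int  # usable storage the workloads need, in TB


def hci_nodes(demand: Demand, cores_per_node: int, tb_per_node: int) -> int:
    """Coupled scaling: each node adds compute and storage together,
    so the scarcer resource dictates the node count."""
    return max(
        math.ceil(demand.cores / cores_per_node),
        math.ceil(demand.storage_tb / tb_per_node),
    )


def disaggregated_nodes(
    demand: Demand, cores_per_compute_node: int, tb_per_storage_node: int
) -> tuple[int, int]:
    """Independent scaling: compute and storage pools are sized separately."""
    return (
        math.ceil(demand.cores / cores_per_compute_node),
        math.ceil(demand.storage_tb / tb_per_storage_node),
    )


# Hypothetical storage-heavy workload and hypothetical node sizes.
demand = Demand(cores=256, storage_tb=2000)
CORES_PER_NODE = 64
TB_PER_NODE = 100

hci = hci_nodes(demand, CORES_PER_NODE, TB_PER_NODE)
stranded_cores = hci * CORES_PER_NODE - demand.cores
compute, storage = disaggregated_nodes(demand, CORES_PER_NODE, TB_PER_NODE)

print(f"HCI: {hci} nodes, {stranded_cores} cores left idle")
print(f"Disaggregated: {compute} compute nodes + {storage} storage nodes")
```

With these made-up numbers, the coupled design has to buy compute it does not need in order to reach the storage target, while the disaggregated design sizes each pool to its own demand. The trade-off in practice is the extra integration work that separate pools can bring.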

“What disaggregated infrastructure is promising for customers is the ability to bring the flexibility of three-tier as well as the simplicity that they associate with HCI, bringing the best of those two worlds together to help customers support this rapid pace of change that they’re dealing with today,” Chhabra said, adding that what Dell unveiled at GTC – the PowerEdge XE8712 for large AI and HPC workloads and including Nvidia’s GB200 NVL4 architecture – and what’s planned for Dell Technologies World in May dovetail well with the news this week.

That news is heavy on compute and storage. The company is rolling out four new servers – the PowerEdge R470, R570, R670, and R770, all with Intel’s “Granite Rapids” Xeon 6 P-core processors for higher performance. The first two are single-socket systems, the last two dual-socket machines, and all are designed for both traditional and emerging workloads, including AI inferencing, HPC, virtualization, and analytics.

The benefits include system consolidation that frees up to 80 percent of space for each 42U rack with the R770, 3.35 times the performance of their predecessors, and 2.57 times the number of cores. They’re also based on the Data Center – Modular Hardware System (DC-MHS) architecture, a standard server design from the Open Compute Project that makes it easier to integrate into existing infrastructures.

Storage Gets Performance, Efficiency Boost

The ObjectScale X560 HDD-based systems accelerate key workloads like media ingest, backups, and AI model training with 83 percent faster read throughput. The ObjectScale XF960 delivers up to 2X greater throughput per node than the closest competitor and up to 8X greater density than previous-generation all-flash systems.

The new offerings also include such capabilities as multi-site federation, copy-to-cloud, geo-replication, global namespace, and data governance to run secure AI data lakes. They are supported by a hybrid cloud product developed with cloud provider Wasabi.

“The platform is also able to help both types of customers, those with new data lakes or existing data lakes, streamline their operations with an increase in performance, two times greater than the closest competitor,” says Martin Glynn, Dell’s senior director of product management for unstructured data, adding that the co-developed offering with Wasabi can offer enterprises significant efficiencies and advantages over more general public cloud uses. “This is an area that we see huge potential in object storage in general.”

Advancements in PowerScale scale-out storage include 122 TB SSDs in a 2U node configuration that doubles all-flash capabilities, along with refreshed archive and hybrid nodes – the H710, H7100, A310, and A3100 – that use a refreshed compute module to improve latency and performance for HDD-based platforms.

PowerStore systems are taking advantage of Dell’s AIOps software for AI-powered analytics and improved zero-trust security, while the PowerProtect backup portfolio now includes the DD6410 system, which can hold 12 TB to 256 TB and provide 91 percent faster restores, and the All-Flash Ready Node, with up to 220 TB and more than 61 percent faster restore speeds.
